Despite the uncanny ability of Large Language Models (LLMs) to almost convince us of their personhood, some experts have called them “mouths without brains” capable of uttering “infinite fluent nonsense”¹. This is hardly an affliction limited to artificially intelligent beings: small children are also extremely adept at infinite fluent nonsense, as any parent or daycare teacher can testify. However, there seem to be fundamental differences between the generative mechanisms of these two varieties of intelligent being.

The core issue stems from the nature of these models, which are essentially based on pattern matching. Given a textual prompt, the model outputs a reply or continuation that is statistically likely, but it seems to lack any real understanding of the subject at hand. This in itself is not so strange. Language is essentially a representation of the objects and notions that humans encounter in the course of surviving in the world, and what the models have is a representation, but no actual experience, of these objects and notions. If one is born congenitally blind and has never encountered the ideas of geometry, would it mean anything to be told that a car is shiny and more or less rectangular, even if one could map the word “car” to its function? For a given object, is knowing the word enough? And how does one know anything?

Without going deep into epistemology, knowledge can at minimum be defined as a set of verified common beliefs. Leaving aside Kant’s a priori knowledge² for a moment, we can say that we begin to form these beliefs because our sense data coalesces around our understanding of the things we experience. Critically, sense data of different modalities correlate with our experience of an object and are corroborated by the experience of our peers. This is where LLMs reach their limit: an LLM can tell you that a car is for travelling and is shiny and more or less rectangular, but it cannot assign meaning to these words in any fundamental way. By meaning we intend something very basic: that there is some model of understanding behind the representation of the word “shiny”. The LLM can even prattle on about all the other things that are shiny, and might well tell us that smooth things tend to be shiny, but the fundamental point is that these words map to specific human sensory experiences. “Smooth” is an expectation of how an object will feel to the touch; “shiny” is how light will appear when reflected off its surface. Can these words mean anything in the absence of their sensory precedent? Does a congenitally blind baby, also devoid of all tactile sensation, come to assign any meaning to them? In the absence of any other correlated sense data, I think this would be highly unlikely. In their lack of epistemological models, babies and LLMs are comparable. LLMs do not know what they know or how they came to know it, but, even more importantly, they do not know what it is that they don’t know. Hence their tendency to confabulate and hallucinate. It is similar to being in a dream-like state in which one has no means (or motivation) of validating reality.

In contrast, starting from a purely sensory state of being³, babies very quickly begin to create and attach meaning to their sense data through interaction with other humans.

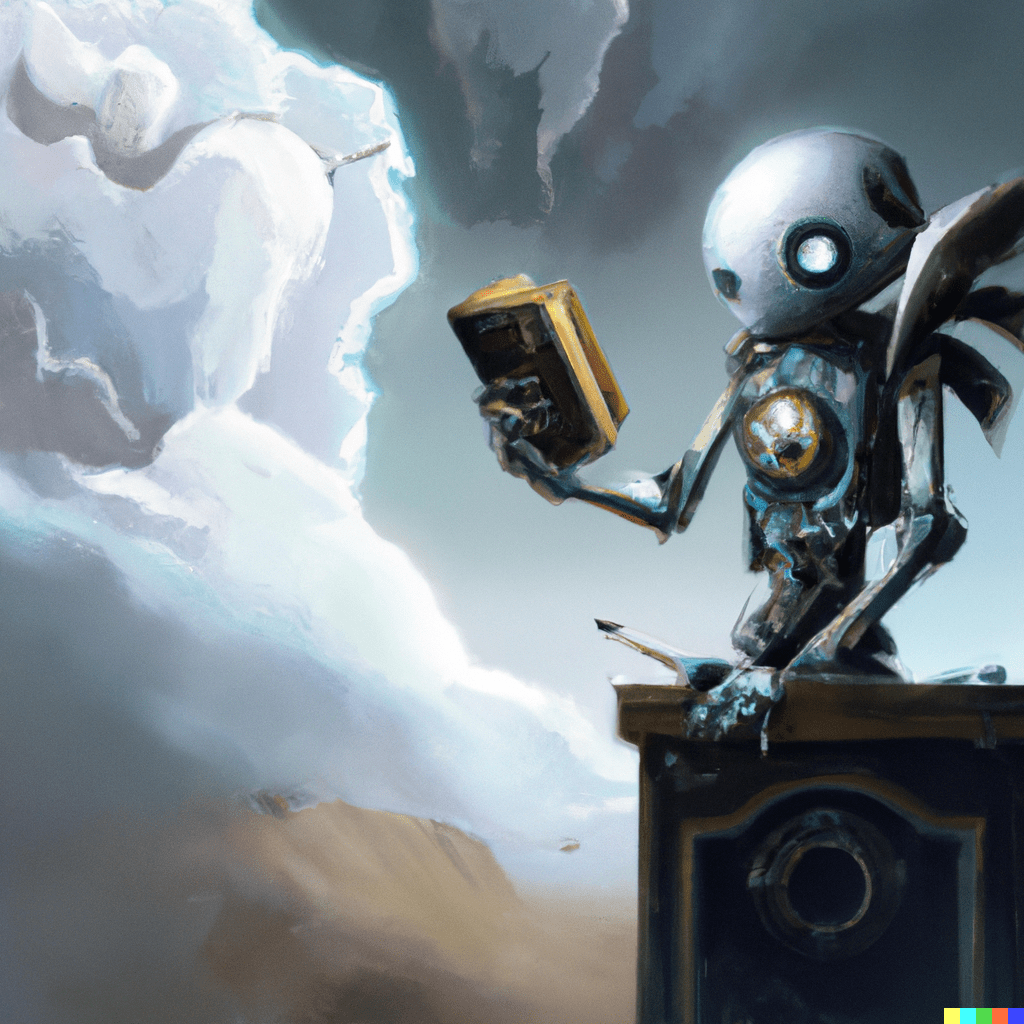

Embodiment, by design, allows one to receive correlated data of various modalities: seeing is supplemented by hearing and touch. Does that mean embodiment might be a necessary condition for artificial general intelligence? It might very well be; however, it may in principle be possible to provide correlated multimodal data without embodiment. In this sense, it might be argued that text-to-image generative models such as DALL·E and latent diffusion models have a better model of epistemology than pure LLMs, as they are able to translate textual data about an object into a visual representation that coheres well with whatever meaning humans assign to it. In essence, these multimodal models appear to be just beginning to tap into the core of communication: the shared construction of meaning.

Notes:

- The title is from Professor Sampo Pyysalo’s talk at the Silo AI seminar “Generative AI and ChatGPT – possibilities and limitations”.

- Most simply, a priori knowledge is knowledge independent of experience that nonetheless forms a necessary scaffold for further knowledge. Examples are the notions of space and time, which are never experienced in themselves but are required a priori for all empirical knowledge (see “A Priori Justification and Knowledge” in the Stanford Encyclopedia of Philosophy).

- In this sense, babies sit at the opposite end of the spectrum from LLMs, having access to pure sensory data without any representation.

- The accompanying image was generated by DALL·E 2 with the prompt: “A punk robot conjuring a picture in the clouds while reading a medieval book in a moonlit, cloudy landscape. Digital Art.”